The advent of large language models (LLMs) has led to many breakthroughs in the field of Natural Language Processing (NLP), and its effects on the world at large have been transformative for organizations in most every industry.

However, the massive size of LLMs—hundreds of billions of parameters, for example—and their generic nature (due to the content on which they are trained) makes LLMs expensive to operate and often impractical for the domain-specific functionalities that organizations depend on in their day-to-day operations.

At expert.ai, we know that organizations are looking for solutions that are practical and effective, in addition to being cutting edge. When working with LLMs, reducing the computational cost is always a key area of focus. Our research teams have been investigating ways to work with these models that can be much more cost effective.

One of the results of this research is “Multi-word Tokenization for Sequence Compression,” the second of two papers authored by expert.ai researchers presented at the annual Conference on Empirical Methods in Natural Language Processing (EMNLP) that took place December 6-10 in Singapore.

Addressing the Way LLMs Approach Tokenization

Despite their successes, LLMs like GPT possess hundreds of billions of parameters that entail enormous computational cost, by design. Researchers have been looking for ways to take advantage of such models without incurring this magnitude of costs long before the release of the public unveiling of LLMs via ChatGPT one year ago.

Language Model compression techniques aim to reduce computational costs while retaining performance. Methods such as knowledge distillation, pruning and quantization have focused on creating lighter models either by shrinking the architectural size or by reducing the number of FLOPs (floating point operations per second, a measure of computer performance). More recently, carefully designed inputs or prompts have been shown to produce impressive performance. However, the trend of designing longer and longer prompts to achieve this has also led to a significant rise in computational cost.

So, what is another aspect that we can look to for reducing computational costs?

In techniques that deal with text, such as natural language processing (NLP), one of the first processes that must happen is that the text must be cleaned before it can be used for further analysis. Tokenization can be used to accomplish this by breaking down the raw text into single words, small groups of words or symbols, referred to as tokens. These tokens will then be used to understand the meaning of a text. Tokenization is a necessary step in the feeding of textual data to a LLM.

So, how does tokenization impact model compression?

- Tokenizers split words more often on languages other than English.

- Shorter sequences are faster to process and require less computations.

- Domain-specific tokenizers produce compact and meaningful inputs that reduce computational costs, without harming performance.

Retraining the Tokenizer Layer

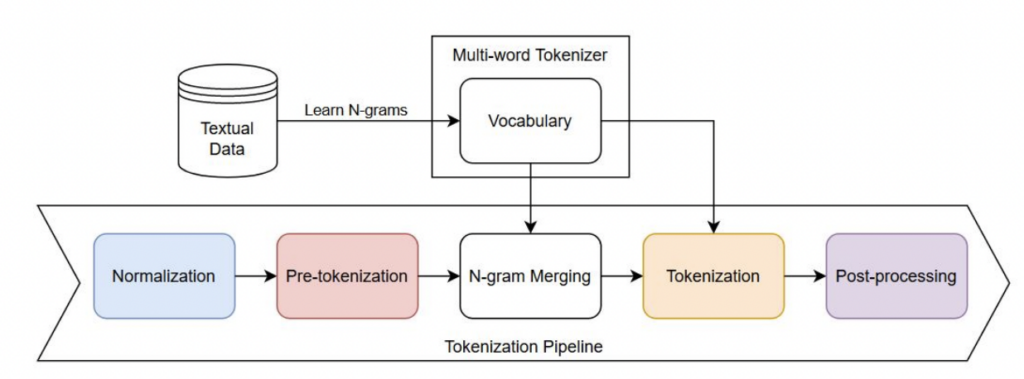

Our research directly aims at the heart of this problem: we focus on retraining the tokenizer layer and finding a smart way to initialize the embeddings of the new tokens in the pre-trained language model. In particular, we extend the vocabulary with multi-word tokens. In such a way, we obtain produce a more compact and efficient tokenization that yields two benefits:

Increase in performance due to a greater coverage of input data given a fixed sequence length budget

Faster and lighter inference due to the ability to reduce the sequence length with negligible drops in performance.

Multi-word tokenizer pipeline

In our paper, we compress sequences by adding the most frequent multi-word expressions to the tokenizer. We conducted an investigation on three different datasets by evaluating each model in conjunction with other compression methods.

Our approach is shown to be highly robust for shorter sequence lengths, thus yielding a more than 4x reduction in computational cost with negligible drops in performance. In the future, we expect to extend our analysis to other language models and tasks, such as language generation in the scope of sequence compression.

Explore the full research in our paper: Multi-word Tokenization for Sequence Compression.