Artificial intelligence (AI) has become a red-hot topic with record levels of investment in AI companies and promises of capabilities that will revolutionize our lives.

According to McKinsey’s latest State of AI report, AI adoption has continued to grow: 57% of companies now claim to use AI in at least one business function, up from 45% in 2020.

Analysts at Gartner project that by 2025, 70% of organizations will have operationalized artificial intelligence architectures, prompted by the technology’s rapid maturity. That is echoed by IDC’s report of growing AI investment across industries to improve customer insight, increase employee efficiency and accelerate innovation. Spending on AI systems is expected to grow from $85.3 billion in 2021 to more than $204 billion in 2025.
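
To put the spending forecast in perspective, the two figures above can be turned into an implied yearly growth rate. The snippet below is a minimal sketch, not part of the IDC report: the function name `implied_cagr` is our own, and because the text says "more than $204 billion", the result should be read as a lower bound.

```python
# Implied compound annual growth rate (CAGR) from the spending figures quoted above.
start_spend_bn = 85.3   # 2021 spending in USD billions (from the text)
end_spend_bn = 204.0    # 2025 spending in USD billions ("more than", so a lower bound)
years = 2025 - 2021     # number of yearly growth periods


def implied_cagr(start: float, end: float, periods: int) -> float:
    """Return the constant yearly growth rate that turns `start` into `end`."""
    return (end / start) ** (1 / periods) - 1


cagr = implied_cagr(start_spend_bn, end_spend_bn, years)
print(f"Implied CAGR 2021-2025: {cagr:.1%}")
```

Under these assumptions, spending would need to grow by roughly 24% a year to reach the 2025 figure.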

Given the continued hype around AI, we looked back on 2021 to identify the most debated AI topics and make a few forecasts into 2022.

Investigating AI with AI

The following Expert IQ Report has been developed by expert.ai using its proprietary natural language understanding capabilities to analyze a sample of 2,738 articles concerning artificial intelligence (AI). These articles were published by industry outlets and on consulting firms’ websites between January 2021 and January 2022. Sources include but are not limited to Venture Beat – The Machine, The AI Journal, AiThority, The Media AI Journal, MIT Technology Review and McKinsey.

2021: The Year of Data

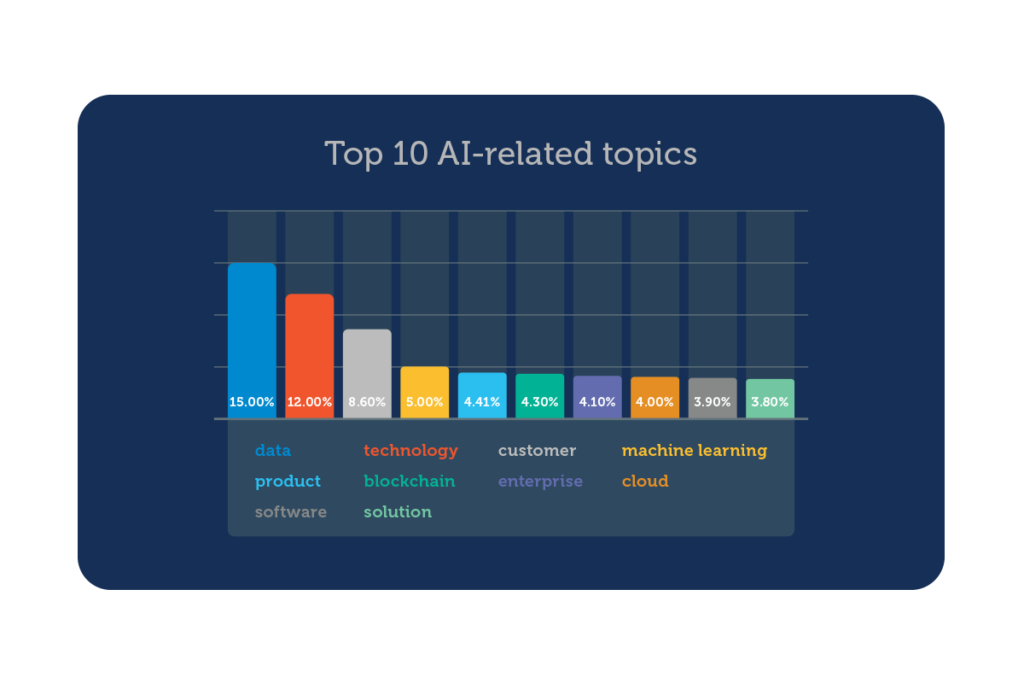

Data was the most discussed topic (15%) across our sample of articles, followed by technology (12%), customer (8.6%), machine learning (5%) and product (4.41%).

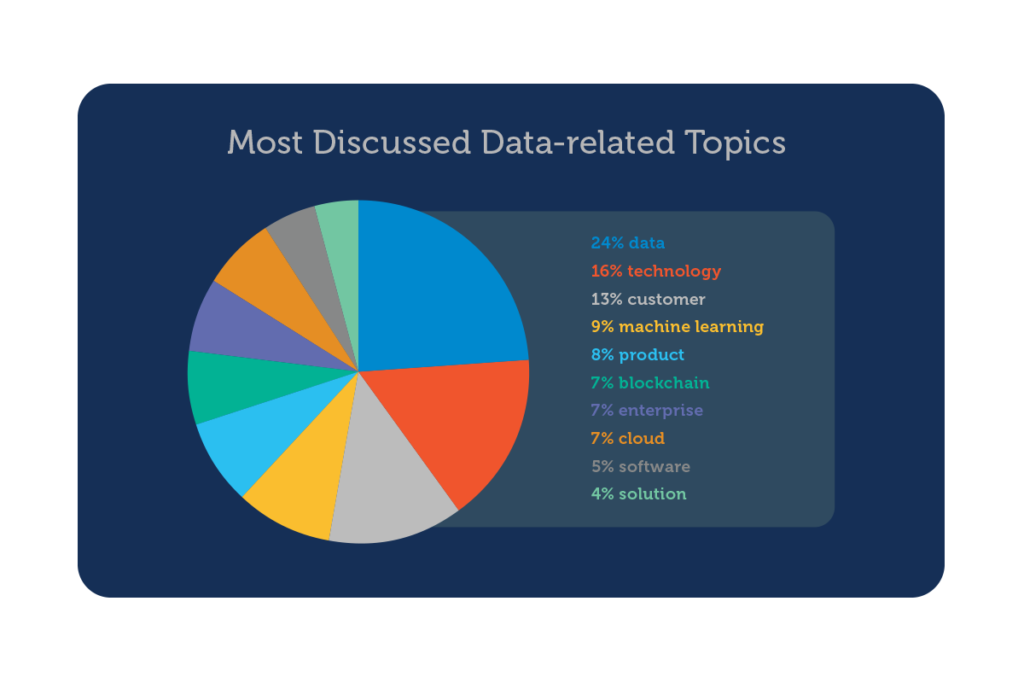

Last year was also a big one for the proliferation of AI models. Digging deeper into the articles about data, we found AI models mentioned in about 24% of them. Machine learning emerged as the most discussed AI approach (16%), while data science (13%), big data (9%), customer (8%) and analytics (8.21%) were the most frequently cited subtopics.

Training (17%), testing (3.72%) and labeling (2.44%) were the most discussed subtopics related to AI models.

According to Will Douglas Heaven from MIT Technology Review, an AI model like GPT-3 was monstrous in scale, larger than any other neural network ever built. It kicked off a whole new trend in AI, one in which bigger is better. But how big is too big? We asked ourselves this question in 2021 as we summarized GPT-3 issues in commonsense terms that left business leaders with some practical advice to consider when evaluating AI solutions.

As a matter of fact, much of the work in delivering real-world solutions rests on ensuring the data sets are large enough and representative enough to capture the information that a subject matter expert recognizes only after years of experience and training.

In many cases, such a large volume of training data is not available. Even if it is, training a model is extremely energy intensive. Training a language model that uses a transformer (213M parameters) with neural architecture search would cost roughly $1-3 million and generate the equivalent of 626,155 pounds (284 metric tons) of carbon dioxide. That equates to the lifetime output of five average American cars.
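
The emissions figures above can be cross-checked with simple arithmetic. The sketch below uses only the numbers quoted in the text plus the standard pound-to-kilogram conversion factor; the per-car value it prints is merely what the "five cars" comparison implies, not an independently sourced statistic.

```python
# Cross-check the CO2 figures quoted above.
LB_TO_KG = 0.45359237   # exact definition of the international avoirdupois pound

co2_lb = 626_155        # CO2-equivalent emissions in pounds (from the text)
cars = 5                # number of car lifetimes in the comparison (from the text)

co2_tonnes = co2_lb * LB_TO_KG / 1000   # pounds -> kilograms -> metric tons
per_car_lb = co2_lb / cars              # lifetime emissions per car implied by the comparison

print(f"{co2_lb:,} lb is about {co2_tonnes:.0f} metric tons of CO2")
print(f"Implied lifetime emissions per car: about {per_car_lb:,.0f} lb")
```

The conversion agrees with the 284 metric tons cited, and the comparison implies roughly 125,000 pounds of CO2 per car over its lifetime.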

Despite the clear evidence that training AI models is very energy intensive, only 3.7% of articles focused on CO2 emissions from AI and the carbon footprint of AI. Environmental sustainability received even less attention (2.3%).

The State of AI for Language Understanding

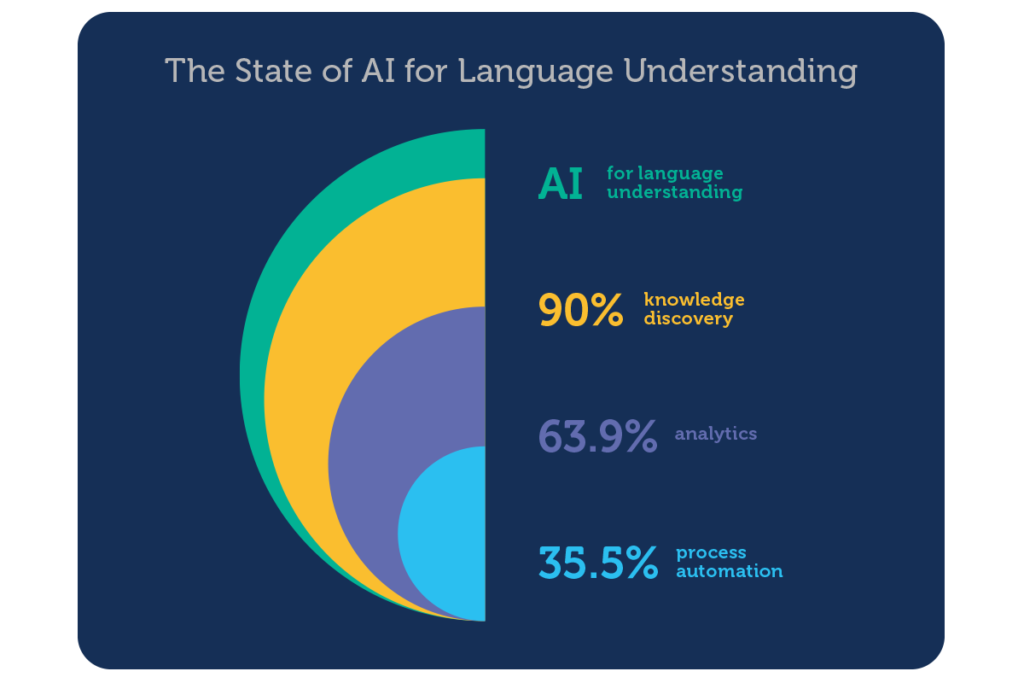

Of the entire sample, 6.17% of articles were related to AI applied to natural language (NL). Within those NL-related articles, three macro use cases dominated the AI innovation conversation for enterprise language data: knowledge discovery/intelligence (90%), analytics (63.90%) and process automation (35.50%).

The Top Five AI-related Industries

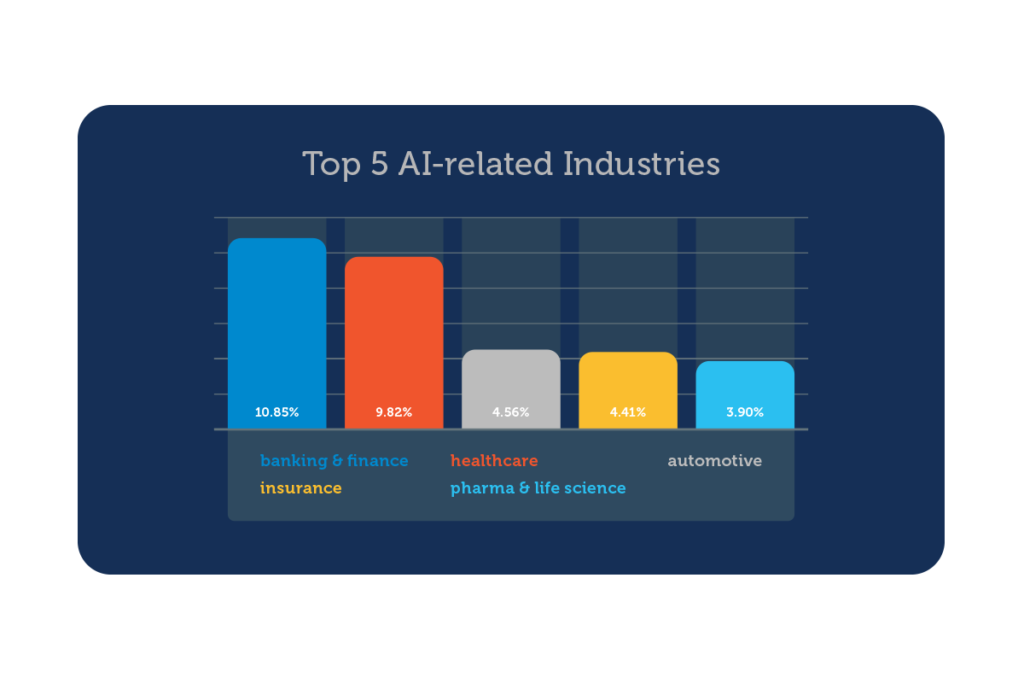

Banking and finance was the most discussed industry in 2021 (10.85%), followed by healthcare (9.82%), automotive (4.56%), insurance (4.41%) and pharma and life science (3.9%).

2022: The Year of Language

Data took center stage in 2021. Its importance will only grow, especially with regard to unstructured data, which, according to IDC, is growing in volume by more than 50% every year and will make up as much as 80% of all data by 2025.

Unstructured data is typically expressed in the form of language. And you can expect more of everything related to language data in 2022, from email to social media posts to digital business files.

As data grows, natural language-related innovations are emerging to manage it effectively. If our findings on this topic are any indication, the drive toward intelligent task automation (35.50% of NL-related articles), analytics (63.90%) and knowledge discovery (90%) will necessitate advanced NL capabilities and innovative platforms purpose-built for the unique complexity of unstructured language data.

Based on that, here are our predictions:

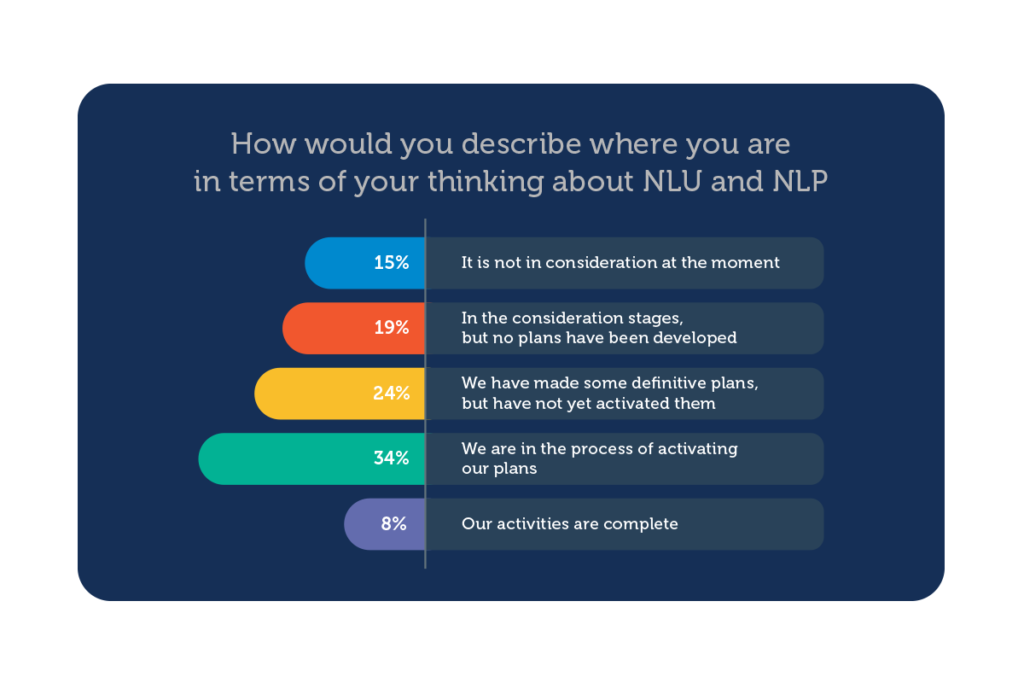

- Natural language-enabled enterprise. The year 2022 will be the year of the natural language-enabled enterprise. In fact, according to a recent report by The AI Journal, 34% of enterprises are in the process of activating NL-based plans while 43% are either making plans or considering their options. AI will help organizations understand the context, meaning and relationships in language. This will enable the enterprise to transform language into data, resulting in better decision making.

- Human-in-the-loop. Technologies so smart that they can learn and manage themselves autonomously do not yet exist. Enterprises are finally beginning to understand that you need a human in the loop. The human-in-the-loop approach organizes your processes so that humans can always add the value that only they can offer.

- Hybrid AI. “The days of singular AI techniques are coming to an end,” according to Gartner. The introduction of composite AI or hybrid AI techniques, even within existing products, will have a profound impact on their capabilities. What can be characterized as a “hybrid AI approach” or “composite AI approach” is based on the principle that no single technique is a fit for every project. A “best-of-both-worlds” scenario that pairs machine learning and symbolic reasoning will continue to be socialized and gain steam.

- Responsible, explainable AI. To leverage AI to its full potential, organizations must demonstrate they are utilizing it in a respectful, responsible manner. The best way to accomplish this is to build as much transparency as possible into the algorithms that drive AI processes without hampering performance or accuracy.

- Accelerating accessibility of ESG data. One of the most relevant lessons learned in 2021 is that large language models are riddled with concerns about bias and energy consumption. These concerns could easily be mitigated by leveraging different AI approaches, such as symbolic AI, which can ensure full understanding of your data. Understanding data is the first step toward governing it, so this is essential to improving the quality of ESG data management for better decision making.
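
To make the hybrid AI and human-in-the-loop predictions above more concrete, here is a minimal, purely illustrative sketch of how the two ideas can be combined. It does not describe any specific product: the keyword rules, the `toy_model` stand-in for a trained classifier and the 0.8 confidence threshold are all hypothetical. Explicit symbolic rules are tried first, a statistical model is used when no rule applies, and anything the model is unsure about is routed to a person.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Decision:
    label: Optional[str]   # None means no automatic label was assigned
    source: str            # "rule", "model" or "human_review"


# Hypothetical symbolic rules: an exact keyword maps to a label.
RULES = {"invoice": "finance", "claim": "insurance"}


def symbolic_classify(text: str) -> Optional[str]:
    """Return a label if any rule keyword appears as a word in the text."""
    words = text.lower().split()
    for keyword, label in RULES.items():
        if keyword in words:
            return label
    return None


def toy_model(text: str) -> Tuple[str, float]:
    """Stand-in for a trained ML classifier; returns (label, confidence)."""
    if "patient" in text.lower():
        return "healthcare", 0.9
    return "other", 0.4


def classify(text: str,
             model: Callable[[str], Tuple[str, float]] = toy_model,
             threshold: float = 0.8) -> Decision:
    """Rules first, then the model, then a human when confidence is low."""
    rule_label = symbolic_classify(text)
    if rule_label is not None:
        return Decision(rule_label, "rule")
    label, confidence = model(text)
    if confidence >= threshold:
        return Decision(label, "model")
    return Decision(None, "human_review")


for sample in ["Please pay this invoice", "The patient was discharged", "Hello there"]:
    print(sample, "->", classify(sample))
```

In this sketch, the rule-based path is fully explainable, the model covers cases the rules miss, and the human reviewer handles whatever neither can settle with confidence.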